The “High-Score Rejection” Controversy: An AC’s Account from NeurIPS 2025


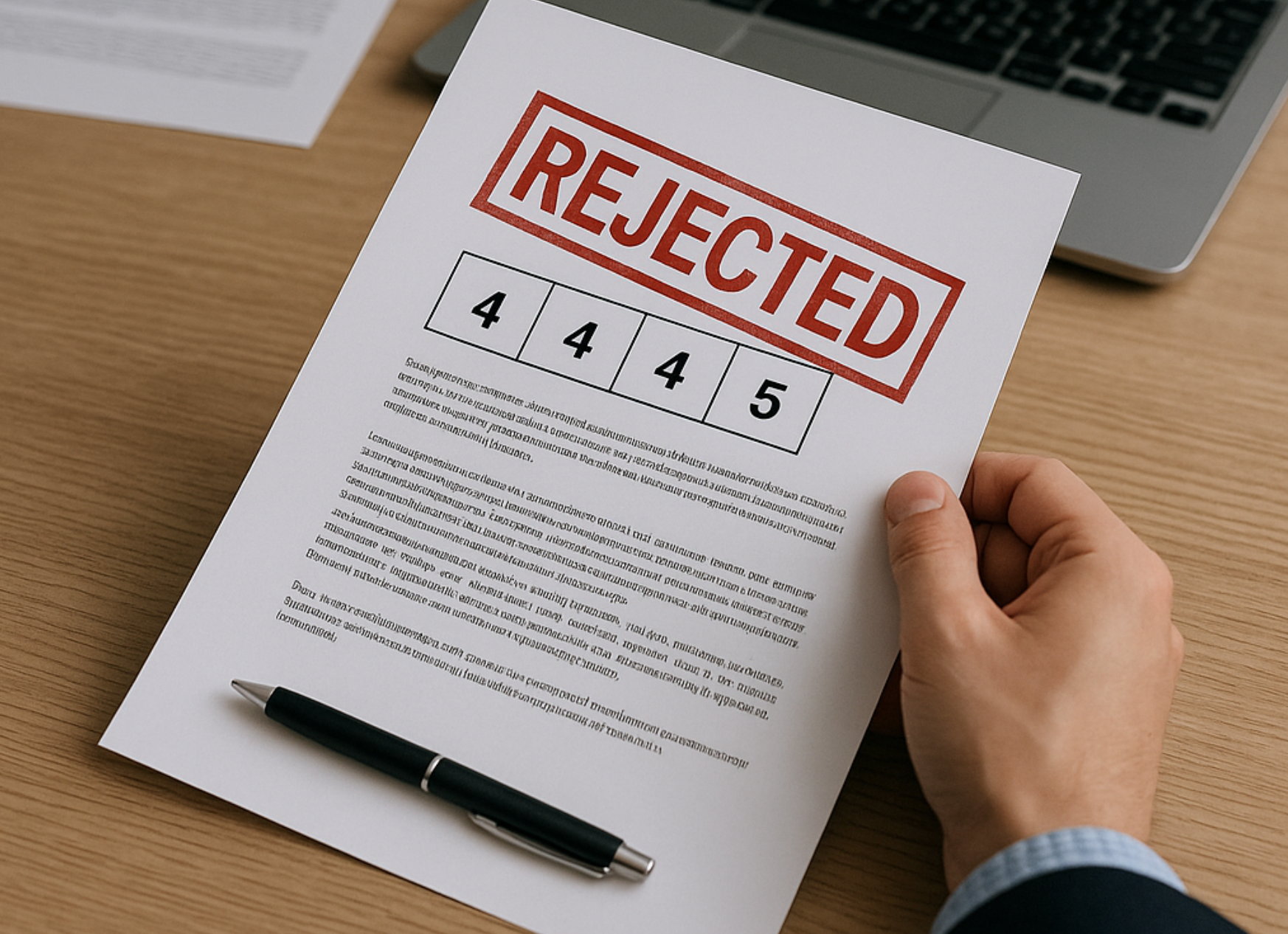

Even papers with scores like 4-4-4-5 are being rejected. Some Area Chairs (ACs) are so concerned that they’ve written to the Program Chairs (PCs), questioning the fairness of the 20–25% fixed acceptance rate.

The following account was shared by an AC serving at NeurIPS 2025 (“I” and “my” below refer to this AC):

When High Scores Still Mean Rejection

This year, I served as an AC. To my surprise and disappointment, I saw papers with positive reviews, reviewer consensus, and solid scores still being rejected. The reason? A rigid commitment to a fixed acceptance quota of 20–25%.

The irony is that the author–reviewer discussions actually worked well this year. Misunderstandings were clarified, scores improved, and consensus became more positive. This should have been good news for authors and the community. Yet even with improved reviews, many such papers were ultimately rejected, simply because the acceptance quota had already been filled.

Why This Policy Feels Problematic

As ACs, we are caught in a paradox:

- On one hand, we are told to encourage constructive discussions, build consensus, and reward improved clarity and quality.

- On the other hand, even when those discussions succeed, we are forced to reject papers because of numerical limits, not scholarly merit.

In my email to the PCs, I expressed both frustration and confusion:

After the discussions, many papers received higher scores and stronger consensus. Authors felt relieved, believing their efforts had paid off. Yet ACs still had to reject some of these papers purely to satisfy a rigid 20–25% quota. This undermines the purpose of constructive dialogue and risks discouraging the community from engaging positively in the future.

The Bigger Question for Our Community

This raises a fundamental question:

Should conferences like NeurIPS continue to enforce a fixed acceptance rate regardless of reviewer consensus?

Or should we move toward a system where each paper is judged on its merits, and acceptance rates naturally reflect the quality of submissions in a given year?

Rigid quotas may create the appearance of selectivity, but they also risk demoralizing authors and reviewers alike. Constructive discussions, one of the most praised parts of this year’s review process, lose their meaning if the outcome is predetermined by percentages.

Some Thoughts

I believe this is an issue worth serious reflection within our community. If we value transparency, fairness, and genuine scholarly evaluation, we must question whether fixed acceptance rates truly serve our goals.

After all, if a paper that earned 4-4-4-5 after constructive engagement can still be rejected, what message does that send to authors, reviewers, and the future of open, collaborative peer review?

What do you think? Should we hold on to fixed acceptance quotas, or is it time for a change?