ICCV 2025 Reviews Are Out: Community Buzz and Discussions

-

The International Conference on Computer Vision (ICCV) 2025 reviews were officially released on May 9th, sparking a wave of excitement, anxiety, and heated discussion across platforms like Reddit and Zhihu. Given ICCV’s prestigious status, the review outcomes naturally triggered diverse reactions, ranging from relief and joy to confusion and frustration.

Here's an (incomplete) list of reported community scores:

| Scores | Confidence Levels | Comments and Community Reaction |
| --- | --- | --- |
| 6,5,4 | 3,4,4 | Strong optimism for acceptance after rebuttal. |
| 5,5,5 | 5,4,4 | Secure accept, cautiously optimistic. |
| 5,5,3 | 5,4,3 | Potential difficulty addressing concerns from the lowest reviewer. |
| 5,4,2 | 4,3,3 | Challenging scenario, yet community suggests trying a detailed rebuttal. |
| 5,3,2 | 5,3,5 | Addressable issues, cautious optimism to flip borderline reject. |
| 4,4,4 | 3,3,3 | Strong borderline accept, community suggests high chance with careful rebuttal. |
| 4,4,3 | 4,3,3 | Commonly reported, seen as cautiously optimistic, critical to flip borderline reject. |
| 4,4,2 | 4,3,4 / 5,1,3 | Borderline situation, community advises aggressive rebuttal especially due to high-confidence weak reject. |
| 4,3,4 | 4,3,4 | Slight optimism, borderline reject might be flippable. |
| 4,3,2 | 4,4,3 / 2,4,4 | Difficult scenario, yet many recommend attempting a rebuttal. |
| 4,2,2 | 3,2,4 / 4,4,4 | Community views challenging; borderline accept reviewer crucial. |
| 3,3,3 | 3,4,4 | Borderline reject across the board, challenging but some optimism with a strong rebuttal. |
| 3,2,6 | 3,3,3 | Extreme variance; controversial among community members, potential for rebuttal impact. |
| 3,2,2 | 4,3,4 | Low optimism; majority community advice is to consider alternative submissions. |
| 3,2,2 | 4,4,5 | Difficult scenario but still attemptable through detailed rebuttal. |
| 2,2,5 | 3,4,5 | Highly variable scores, community strongly recommends a detailed rebuttal. |
| 2,2,2 | 3,4,4 / 3,3,4 | Generally considered unlikely, community suggests withdrawal or major revision for other venues. |
| 2,3,4 | 4,3,3 | Community sees potential for improvement; borderline accept score crucial to uphold. |
| 2,3,2 | 2,3,3 | Low community optimism, many consider redirecting efforts to other conferences. |
| 2,4,4 | 3,4,4 | Moderate optimism, a good rebuttal might secure acceptance. |
| 1,4,5 | 5,3,2 | Very high variance; contentious scenario, difficult rebuttal but still attemptable. |
| 1,1,6 | 5,5,5 | Highly controversial, triggered discussions about reviewer reliability and decision fairness. |

Interesting Community Highlights

Extreme Variances and Controversies

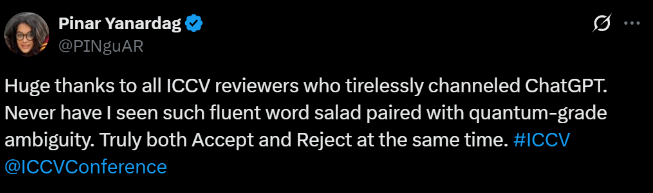

Highly variable scores like 1,1,6 sparked intense debate around reviewer consistency and fairness.

Rebuttal Strategies

Many researchers announced their intentions to submit strong rebuttals, particularly when borderline or "weak reject" scores (2) were involved. A widely shared rebuttal guide by Devi Parikh gained popularity within the community.

Community Morale and Stress

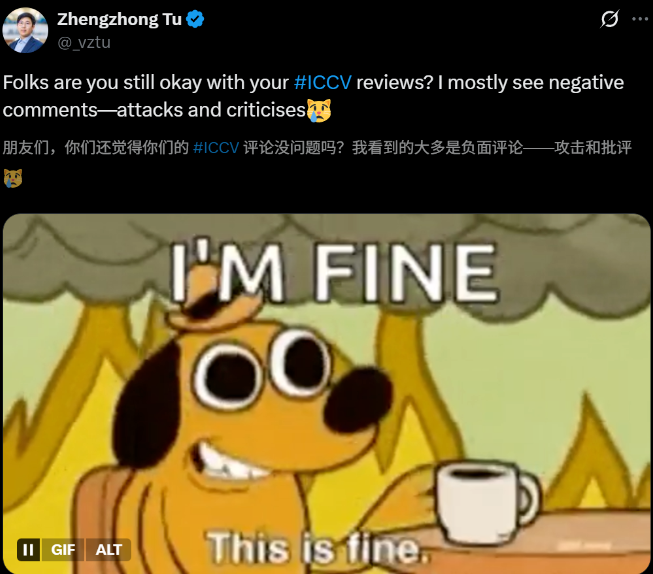

Researchers used humor and memes, including reposting "good luck charm" images like Yang Chaoyue, reflecting the intersection of cultural phenomena and academic stress.

The above image of Yang Chaoyue, often reposted on Chinese social media with good wishes, symbolizes the hope for favorable outcomes amidst stressful events, fitting the sentiments of researchers awaiting ICCV 2025 reviews.

Confusion About New Scoring System

ICCV 2025 introduced a new scoring scale:

- 6: Accept

- 5: Weak Accept

- 4: Borderline Accept

- 3: Borderline Reject

- 2: Weak Reject

- 1: Reject

The new scale was confusing at first, but clarifications quickly circulated across community forums.
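For anyone still translating numbers into labels, the scale above can be written as a small lookup. The sketch below is only an illustration: the `summarize` helper is hypothetical, and the mean and spread it reports are a convenient way to eyeball a score set, not how ICCV area chairs actually reach decisions.

```python
from statistics import mean

# Labels for the ICCV 2025 scale, exactly as listed above.
SCALE = {
    6: "Accept",
    5: "Weak Accept",
    4: "Borderline Accept",
    3: "Borderline Reject",
    2: "Weak Reject",
    1: "Reject",
}


def summarize(scores):
    """Return labels, mean, and spread (max - min) for one paper's scores.

    Hypothetical helper for illustration only; it says nothing about
    acceptance odds.
    """
    return {
        "labels": [SCALE[s] for s in scores],
        "mean": round(mean(scores), 2),
        "spread": max(scores) - min(scores),
    }


if __name__ == "__main__":
    # Two score sets taken from the community table.
    for scores in ([1, 1, 6], [4, 4, 3]):
        print(scores, summarize(scores))
```

A set like 1,1,6 has a spread of 5, the widest possible on this scale, which is why it drew so much attention, while 4,4,3 has a spread of only 1.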

Finally

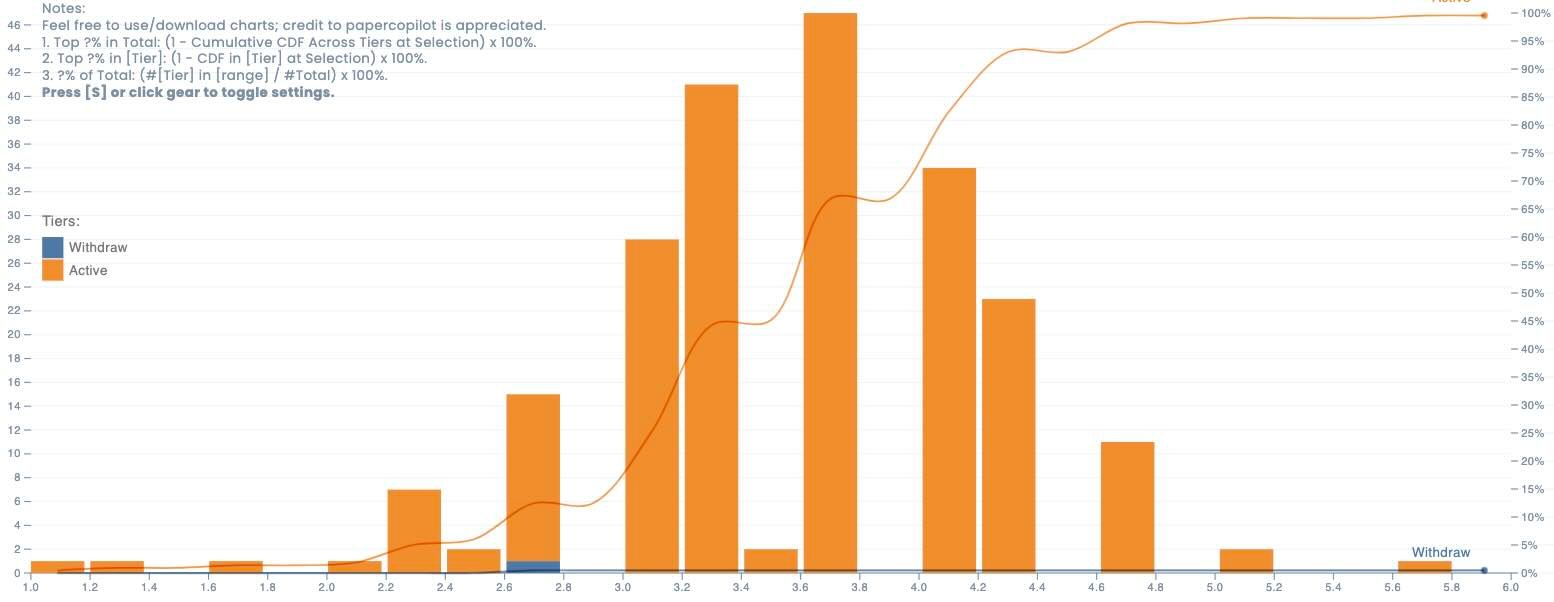

We recommend contributing to and checking out the ICCV 2025 statistics at Paper Copilot.

Good luck with your ICCV 2025 submissions and rebuttals! May your reviewers be fair, your rebuttals persuasive, and your final outcomes positive.

-

Alonzo's ICCV 2025 Journey (and a Bit of CVPR Drama)

This is a story shared by Alonzo on the Zhihu platform.

I’m currently a PhD student in Information and Communication Engineering at the University of Science and Technology of China. Let me share a bit about my recent ride through the CVPR and ICCV 2025 review process. Spoiler: it’s been a rollercoaster.

CVPR: Surprisingly Fair (With a Dash of Confusion)

Let’s start with CVPR. We received a 333 (three borderline scores), which I honestly felt was fair. Two of the reviewers were pretty responsible and provided solid, constructive suggestions, especially regarding ablation studies. After discussing it with my co-authors, we agreed their feedback made our paper's logic stronger.

What did feel a bit off was the third reviewer’s comment. They said our video-based method resembled an identity-preserving face generation pipeline. Wait, what?

At first, I was confused. I mean:

- These days, if your method involves any kind of "control," it has to resemble ControlNet, right? And if it doesn’t, reviewers ask why it doesn’t follow ControlNet.

- How does a video-based method resemble an image-based pipeline? The logic felt... strained.

Anyway, we rebutted — assuming they might have misunderstood our work. Thankfully, all three reviewers gave a confidence score of 3. One reviewer even promised to raise their score, and did — from 3 to 4.

But alas, the AC didn’t buy it.

The reasoning? That reviewer didn’t actively participate in the discussion phase. So despite the raised score, the paper was rejected with a 433. Fair enough — we still got valuable feedback, and the paper became much better as a result.

ICCV 2025: The Plot Twist Nobody Asked For

We revised and submitted to ICCV. The new version was stronger in every way. Then came the shocker:

Scores: 2, 3, 4 → Meta: 545 → Rejected.

Let me show you a censored screenshot of one of the reviews. It’s hilariously short.

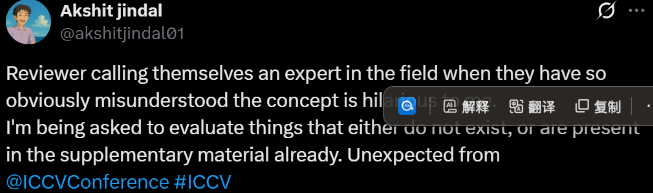

The second one wasn’t much better — maybe 20% longer. But both were rejection reviews. How do you reject a paper with just a few vague lines? Isn’t it basic professionalism that “those who question should provide evidence”?

And let’s not even talk about how ICCV was sending out emails signed as CVPR — including survey emails. That alone chipped away at any impression of professionalism.

Reviewer 1:

Started their comment with “I believe that...” and proceeded to question the effectiveness of a small component in our pipeline. Ended with a score of 2.

Seriously? “I believe”? This felt like that live-stream moment where Da Bing says anyone who starts with “I feel like...” should just be answered with “Sure, whatever you say.”

How do you rebut that? There’s no path to constructive discourse — just a presumption of guilt.

Reviewer 2:

Classic template rejection. First line: memory consumption. Yes, our pipeline has several steps — fair. But we use small models. Some papers have a single base model that consumes more memory than all our components combined.

Also, let’s talk compute efficiency — our method is 8x faster than previous approaches. But of course, that was conveniently ignored.

Frankly, in today’s pretrained model-dominated era, nitpicking about memory consumption feels disingenuous. You used to get criticized for not using large models. Now you do — and they say it’s too heavy.

So, what do you want from us? Pick a side already.

Despite all the drama, I’ve learned a lot. The CVPR round, while not perfect, gave helpful feedback. ICCV, on the other hand, left me disappointed — not because of rejection itself, but due to the lack of genuine engagement in the reviews.

Anyway, the paper’s getting stronger, and we’ll keep pushing forward.

Onward.

-

I have to say, the reviewers I got this time were actually pretty solid, even though the scores weren’t exactly high (lol).

What stood out was how they pointed out the exact weaknesses I’ve always felt uneasy about; and they didn’t just stop there. They actually suggested a bunch of potential solutions, and the experiments they recommended were super clear and actionable.

The only catch? Just one week to run everything. Might be a tight squeeze.

All I can do now is give it my best shot.

-

As of the review deadline, all 11,152 valid submissions to ICCV 2025 have received at least 3 reviews each. Authors can now submit rebuttals until May 16, 11:59 PM (HST).

Final decisions will be announced on June 25.


- Are you planning to submit a rebuttal?

- What do you think about the review quality this year?

- How does ICCV scoring compare to CVPR or ECCV in your experience?