Q&A: How Deterministic Is the Review Outcome for the Same Paper?

-

One frequently asked question from our users is:

"How deterministic is the review result for the same paper?"

While we strive to keep review results coherent and stable across repeated requests for the same submitted paper, we cannot guarantee that two runs will produce identical outcomes. Several factors contribute to this:

- Stochasticity of LLMs: Despite setting the temperature to a low value, inherent randomness in LLMs can still cause slight variations in outputs.

- Dynamic Related Work Search: The ranking method considers not only content similarity but also public web impact, which evolves over time, so the related work retrieved for a paper can differ between runs.

- LLM Sub-version Updates: Occasional updates to the underlying LLM can subtly affect generation patterns.

- Prompt Tweaks and Fixes: Based on benchmarking feedback, we continuously improve our review prompts, which may influence review content across different runs.

However, we do not consider this variability a flaw. In fact, we encourage users to submit multiple review requests for the same paper. Here's why:

- You can identify recurring themes and feedback points that consistently appear, which are more likely to be critical for paper improvement.

- You can deprioritize less consistent feedback, which might stem from minor LLM variance or shifting context. That said, seeing a wider spectrum of points can help you be better prepared for "surprises".

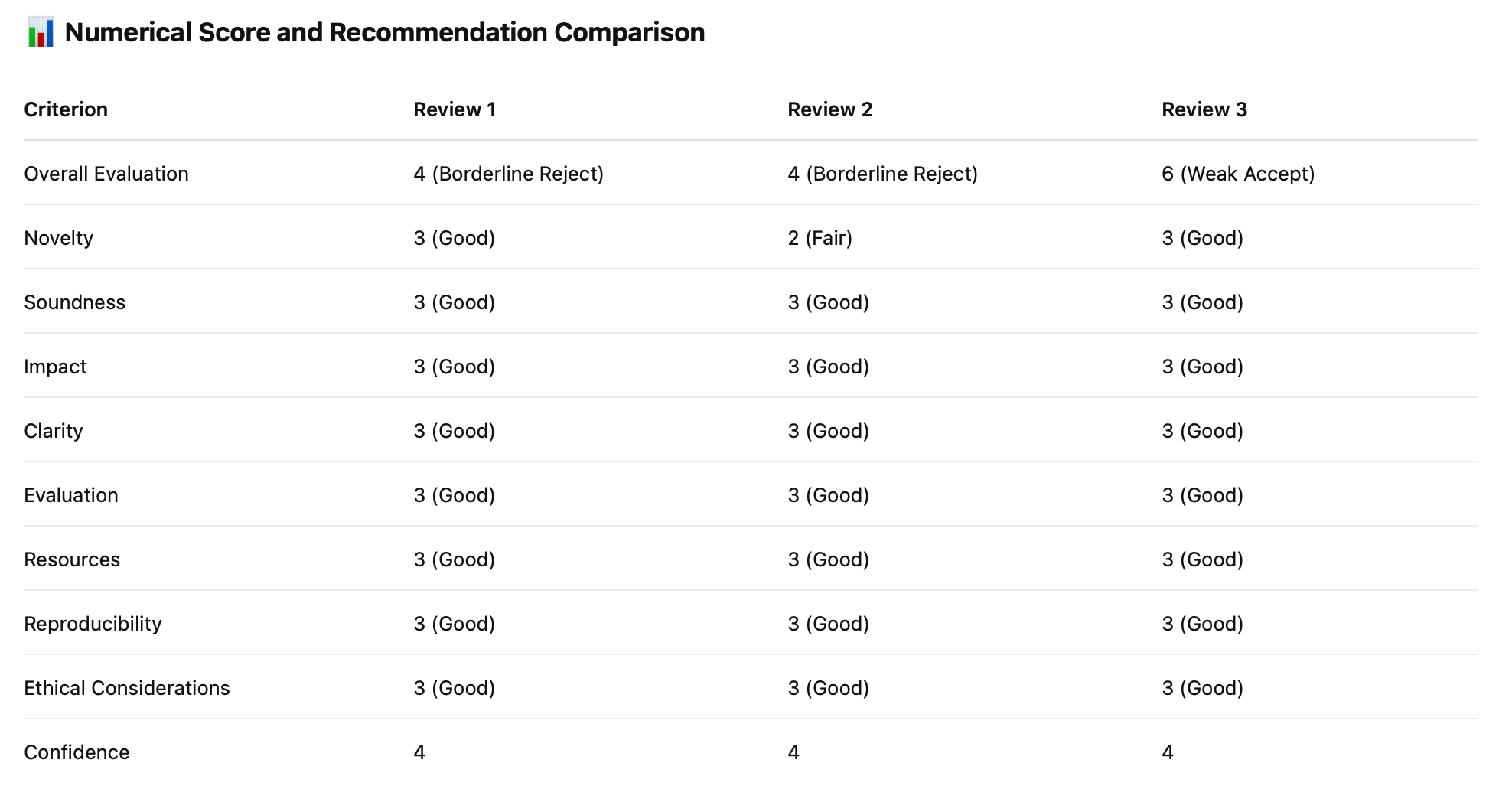

- You can compute simple statistics on the ratings/scores. For example, here is a summary table from reviewing the same paper three times under the same conference setting:

As illustrated above, the review results are largely consistent, with the "Overall Evaluation" leaning more toward "4 Borderline Reject" than "6 Weak Accept."
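
For readers who want to run the numbers themselves, here is a minimal Python sketch of the kind of simple statistics mentioned above. The scores in `overall_scores` are hypothetical placeholders chosen for illustration, not the actual values from the table, and `summarize` is just a helper name used in this example.

```python
from statistics import mean, median, stdev

# Hypothetical overall scores from three review runs of the same paper.
# These are illustrative values, NOT the actual figures from the table.
overall_scores = [4, 4, 6]


def summarize(scores):
    """Return basic descriptive statistics for a list of numeric scores."""
    return {
        "n": len(scores),
        "mean": mean(scores),
        "median": median(scores),
        # The sample standard deviation needs at least two data points.
        "stdev": stdev(scores) if len(scores) > 1 else 0.0,
        "min": min(scores),
        "max": max(scores),
    }


summary = summarize(overall_scores)
print(summary)
```

With only a handful of runs, the median and the min/max range are usually easier to interpret than the standard deviation, since a single outlier run shifts the mean noticeably.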

In this specific case, one might also argue that Reviewer 1's sub-scores do not align with their final decision. Improving the alignment between sub-scores and overall scores is one of the key focus areas on our roadmap.
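
The first two suggestions above (separating recurring feedback from one-off feedback) can also be tallied mechanically once you have labeled the points in each review. The sketch below assumes you have tagged each run's feedback by hand with short theme labels; the labels, the `runs` data, and the `split_by_recurrence` helper are all hypothetical and are not produced by the review system.

```python
from collections import Counter

# Hypothetical theme labels assigned by hand to each of three review runs.
# The names are placeholders, not output of the review system.
runs = [
    {"missing baselines", "unclear notation", "limited ablations"},
    {"missing baselines", "limited ablations", "small dataset"},
    {"missing baselines", "unclear notation"},
]


def split_by_recurrence(runs, min_runs=2):
    """Split themes into those seen in at least `min_runs` runs and the rest."""
    # Each run is a set, so a theme is counted at most once per run.
    counts = Counter(theme for run in runs for theme in run)
    recurring = sorted(t for t, c in counts.items() if c >= min_runs)
    one_off = sorted(t for t, c in counts.items() if c < min_runs)
    return recurring, one_off


recurring, one_off = split_by_recurrence(runs)
print("Recurring:", recurring)
print("One-off:", one_off)
```

The `min_runs` threshold is a judgment call: with three runs, requiring a theme to appear at least twice is a reasonable starting point, but one-off points are still worth a quick read.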

We hope this clarifies how our system works and why slight variability in reviews can be both expected and valuable.

-

Thanks for clarifying this, very helpful!