NeurIPS 2025 Review Released: Remember, a score is not your worth as a researcher!

-

"In every peer review cycle, some will win, some will lose, and most will need a group hug."

— Every CS author in late July

The Reviews Have Landed — Now What?

Today, the NeurIPS 2025 reviews have dropped for both the Main Track and Datasets & Benchmarks Track. Congratulations, commiserations, and caffeine to all! Whether you’re celebrating 6s or nursing some bruising 2s, the next week is your time to shine (or, at least, to clarify, correct, and convince).

Rebuttal period basics:

- Preliminary reviews are now available in your Author Console.

- You have until July 30th, 11:59pm AoE to submit your rebuttal.

- Reviewers see your responses starting July 31st.

- Discussion with reviewers: July 31st – August 6th, also AoE.
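All of these deadlines are in AoE (Anywhere on Earth), which corresponds to UTC-12. A minimal sketch for converting the rebuttal deadline to UTC and to your local time; the variable names are illustrative, and the year is assumed to be 2025:

```python
from datetime import datetime, timedelta, timezone

# Anywhere on Earth (AoE) is UTC-12: a deadline has passed only once it has
# passed in every time zone.
AOE = timezone(timedelta(hours=-12), name="AoE")

# Rebuttal deadline quoted above: July 30, 11:59pm AoE (year assumed to be 2025).
rebuttal_deadline = datetime(2025, 7, 30, 23, 59, tzinfo=AOE)

# The same instant expressed in UTC.
deadline_utc = rebuttal_deadline.astimezone(timezone.utc)
print(deadline_utc.isoformat())  # 2025-07-31T11:59:00+00:00

# The same instant in this machine's local time zone.
print(rebuttal_deadline.astimezone().isoformat())
```

In other words, 11:59pm AoE on July 30 is just before noon UTC on July 31. The Author Console remains the authoritative source for the actual cutoff.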

Golden Rules for Rebuttal:

- Be factual, focused, and respectful. Address factual errors, correct reviewer misunderstandings, and answer questions; don’t vent or include new experiments (save those for your next revision).

- Do not include: links to external pages, identifying information, or anything that violates the double-blind policy.

- If you get a low-quality, disrespectful, or inaccurate review: Use the “Author AC Confidential Comment” — but only for real issues, and proofread carefully.

- Public release: For accepted (and opt-in rejected) papers, your reviews and rebuttals will eventually go public. So, don’t say anything you wouldn’t want your PI, future employer, or your mom to read.

Pro tip: Don’t treat the average score as a crystal ball. ACs care more about review content and discussion than numbers alone.

Score Situation: What Are People Seeing?

This year, review scores feel lower than ever. Even the “inflated” public polls are showing a stark drop.

“Even with some upward bias in online voting, the proportion of papers with an average score below 3.5 is shockingly high. The days of 4 = borderline are over; now, 3 is the median.” — Xiaohongshu user

From the Xiaohongshu/WeChat stats (see the image below):

| Score Range | Number of community votes |
| --- | --- |
| [4.33, 6] | 117 |
| [3.67, 4.33) | 222 |
| [3, 3.67) | 324 |
| [2, 3) | 110 |

Interpretation:

- The most common range is [3, 3.67) — a “borderline” area that leaves authors anxious and ACs with tough choices.

- Fewer than 20% of respondents (117 of 773, about 15%) report means of 4.33 or above.
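As a quick sanity check, the share of each bucket can be recomputed from the four poll counts above. This is only a sketch over self-reported community votes, not official NeurIPS data, and the variable names are illustrative:

```python
# Community poll counts quoted above (self-reported, not official data).
votes = {
    "[4.33, 6]": 117,
    "[3.67, 4.33)": 222,
    "[3, 3.67)": 324,
    "[2, 3)": 110,
}

total = sum(votes.values())

# Percentage of all votes falling in each score range, to one decimal place.
shares = {bucket: round(100 * count / total, 1) for bucket, count in votes.items()}

for bucket, pct in shares.items():
    print(f"{bucket:>13}: {votes[bucket]:>3} votes ({pct}%)")

# The bucket with the most votes.
most_common = max(votes, key=votes.get)
print("Most common range:", most_common)
```

With 773 votes in total, the [3, 3.67) range accounts for about 42% of responses and the top range for about 15%.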

Social media stories are awash with:

- Scores like 4/4/3/2 (“Should I rebut?”), 5/3/2/2, 4/4/4/2, or the heartbreaking all-2s.

- Anxiety about outlier reviewers (especially confident low scores).

- A common refrain: if your average is near 3, don't lose hope — but prepare a strong, factual rebuttal.

Reddit, Zhihu, and WeChat are full of batch statistics:

- “Chair total 14: 1 over 4.0, 4 between 3.0 and 4.0, 9 at or below 3.0.”

- “Scores 5/4/3/2 with one harsh but easily rebuttable review — do I have a shot?”

- Senior AC on X: “Out of my 100-paper batch: 1≥5.0, 6≥4.5, 11≥4.0, 25≥3.75, 42≥3.5. Naive cutoff for acceptance: ~3.75.”
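The senior AC did not say how the "naive cutoff" was derived. One plausible reading, sketched below, is to take the lowest threshold whose cumulative count still fits an acceptance budget. The 25% acceptance rate and the helper name `naive_cutoff` are assumptions for illustration only:

```python
# Cumulative counts quoted by the senior AC: number of papers in a 100-paper
# batch whose mean score is at or above each threshold.
batch_size = 100
at_or_above = {5.0: 1, 4.5: 6, 4.0: 11, 3.75: 25, 3.5: 42}

# Assumption for illustration: roughly a quarter of the batch gets accepted.
assumed_accept_rate = 0.25
budget = assumed_accept_rate * batch_size


def naive_cutoff(counts, budget):
    """Return the lowest listed threshold whose cumulative count fits the budget."""
    fitting = [threshold for threshold, n in counts.items() if n <= budget]
    return min(fitting) if fitting else None


cutoff = naive_cutoff(at_or_above, budget)
print(cutoff)  # 3.75
```

Ranking purely by mean score is exactly what makes this cutoff naive: it ignores review content, confidence, and discussion, which ACs weigh as well.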

In short:

- 3–4 is the new “limbo.”

- ≥4.5 is relatively rare, and usually a good sign.

- Outliers (both low and high) abound, and the rebuttal can make a difference if you’re close to the line.

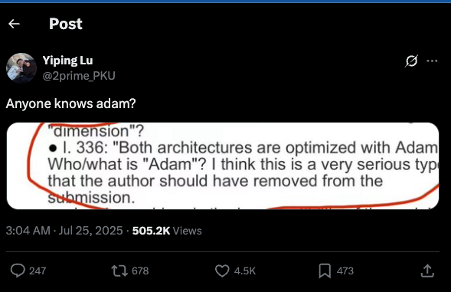

Collected Score Table: 2025 Social Media Reports (as of writing)

Here's a summary table of self-reported scores and cases seen on Chinese forums, Reddit, and WeChat:

| Score Pattern | Track | Commentary |
| --- | --- | --- |
| 4/4/4/5 | D&B/Main | Excellent, but still nerves about outliers |
| 5/3/2/2 | Main | Mixed; single strong positive, but two low (see if rebuttal helps) |
| 4/4/3/2 | Main | Very common; hope if the “2” can be addressed |
| 3/3/3/3 | Main/D&B | “Is this death?” Actually, still possible with strong text |
| 4/4/4/2 | D&B | Confident negative reviewer; focus rebuttal there |
| 4/3/3/3 | Main | Borderline; ACs will look closely at review text |
| 5/4/3/3/2 | Main | 5-reviewer paper; chance if low scores are weakly justified |
| 2/2/2/2 | Main | Unlikely, but never zero hope |
| 4/5/2/2 | Main | Outliers matter; address negatives, emphasize positives |
| 4/3/2/5 | Main | Again, a split batch; rebuttal is key |
| 3/3/4/4 | Main | With reviewer hints of raising score, good chance |

Trends from these numbers:

- Most papers cluster between 3.0 and 4.0.

- It’s rare but not impossible to get four “strong accepts.”

- Negative reviews (scores 1–2) are often outliers, sometimes given with high confidence, but not always fatal if addressed with a clear, factual rebuttal.

- “Rebut or run?” is the most common question, and the answer is: if you are above 2.5, you should rebut unless all reviewers are negative with strong justifications. The bar for “safe accept” is much higher than before.
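To compare your own scores against these patterns, a small helper can compute the mean and the spread of a score pattern. This is a hedged sketch: the function names and the `rebut_threshold` parameter are illustrative, and the 2.5 threshold is simply the community rule of thumb quoted above, not an official criterion.

```python
from statistics import mean


def parse_pattern(pattern: str) -> list[int]:
    """Turn a pattern such as '4/4/3/2' into a list of integer scores."""
    return [int(s) for s in pattern.split("/")]


def summarize(pattern: str, rebut_threshold: float = 2.5) -> dict:
    """Mean, spread (max - min), and the rule-of-thumb rebut flag for a pattern."""
    scores = parse_pattern(pattern)
    avg = mean(scores)
    return {
        "mean": round(avg, 2),
        "spread": max(scores) - min(scores),
        # Community heuristic only: rebut when the mean is above the threshold.
        "rebut": avg > rebut_threshold,
    }


for p in ["4/4/3/2", "5/3/2/2", "3/3/3/3", "2/2/2/2"]:
    print(p, summarize(p))
```

The numbers cannot capture the exception in the rule (all reviewers negative with strong justifications), so the flag is a starting point for a conversation with co-authors rather than a decision.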

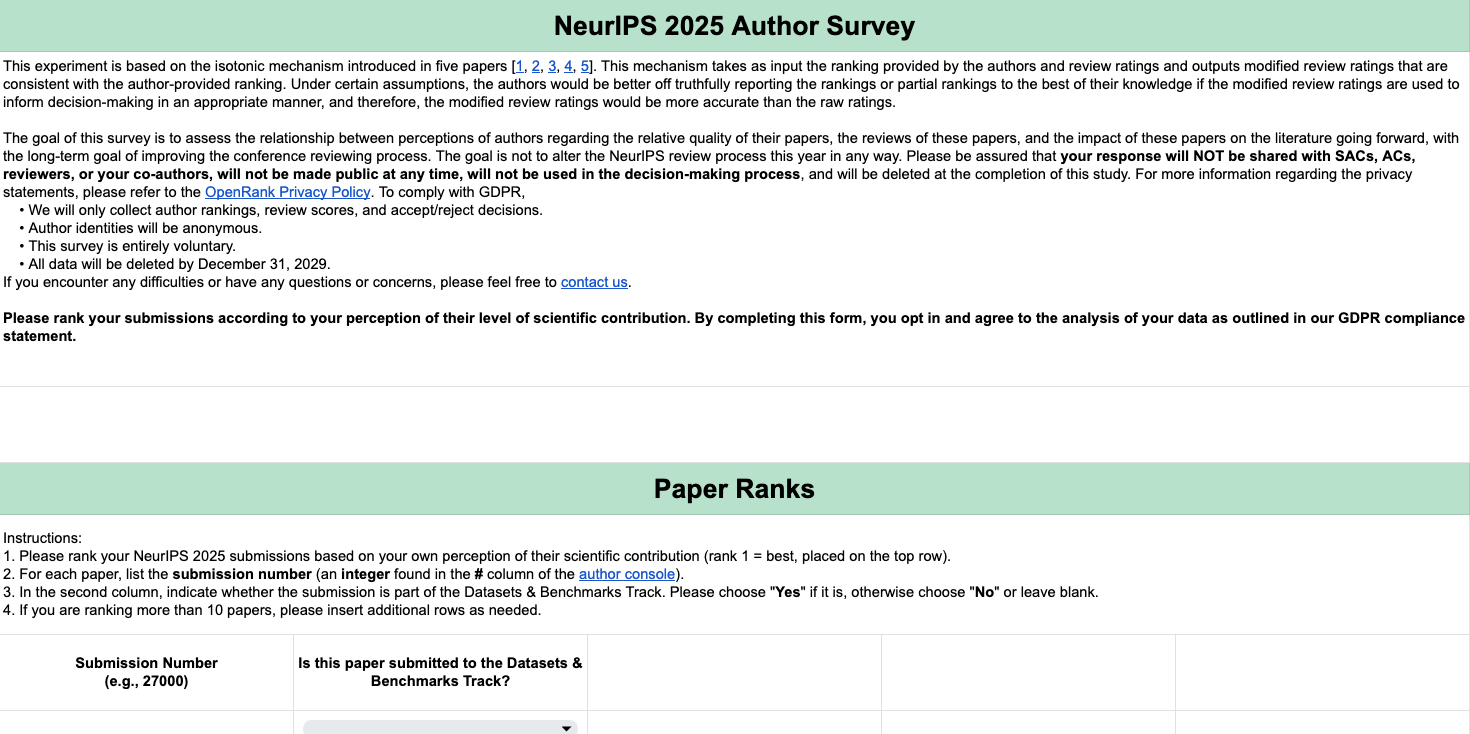

🧐 The Author Survey — And Why It Matters

NeurIPS 2025 has rolled out an optional survey asking authors to rank their own submissions. This isn’t a trap — it’s part of a longer-term research initiative to improve the peer review process, not to influence this year’s outcome.

How does it work?

- You’re asked to honestly rank your own submissions by their scientific contribution.

- The survey explores the relationship between author perceptions, review scores, and future impact.

- The ultimate aim? To find out whether author-informed rankings can improve the reviewing and decision-making process, leveraging insights from the isotonic mechanism.

Privacy note: Your response is completely anonymous, not shared with ACs or reviewers, and will be deleted after the study finishes.

Echoing the CSPaper Mission

This is very much aligned with the mission of CSPaper Review:

- Bringing transparency and feedback early in the process

- Experimenting with ways to make reviewing more fair, consistent, and author-centric

- Building a community that shares insights, pain points, and best practices

CSPaper Review has already served 7,000+ unique users, processed 15,000+ reviews, and directly addresses the exact kind of reviewing bottleneck and author pain the community faces right now.

Why Try CSPaper Review? A New Perspective

If you’re feeling battered by NeurIPS reviews, or just want a second opinion for your next round (ICLR, AAAI, EMNLP...), CSPaper Review offers:

- Fast, conference-specific peer reviews (within 1 minute)

- Benchmarked realism (using real review data from OpenReview/social media)

- Feedback on where your paper might stand in a different venue

- Useful for diagnosing common reviewer objections, clarifying writing, or testing rewrites before next submission

- Free to use (up to 20 reviews/day), no login required

Remember, tools like CSPaper Review won’t change your current NeurIPS fate, but they can help diagnose weaknesses, frame your rebuttal, and plan for future resubmission or revision.

The CSPaper team is also keen to learn how our tool matches the reviews you obtain. We welcome you to share with us or the community by emailing support@cspaper.org or creating a post here!

End Words: Keep Calm, Rebut Wisely, and Stay in the Game

To everyone feeling crushed, furious, or numb: You are not alone.

- Most people’s scores are “mid.”

- Outliers are everywhere.

- The review text matters more than the mean.

- “Borderline” is the new normal.

Write your best rebuttal: factual, concise, and polite.

Discuss with your co-authors, check the CSPaper Review tool for a fresh look, and don’t be afraid to ask your community for support or advice.

And remember: a score is not your worth as a researcher. May the review gods be with you. May your scores rise, and may you touch grass before the notifications drop.

— The CSPaper.org team

Now, go forth and rebut. Or, at least, go outside for five minutes — you earned it.

-

As a reviewer, I got messages from OpenReview about authors withdrawing their submissions upon seeing the review scores. The two withdrawn papers have scores as follows:

4,3,3,3

4,3,3,2

I guess a mean score >= 4 would be a good spot for a chance of acceptance? Anything below could be kinda far from acceptance?

-

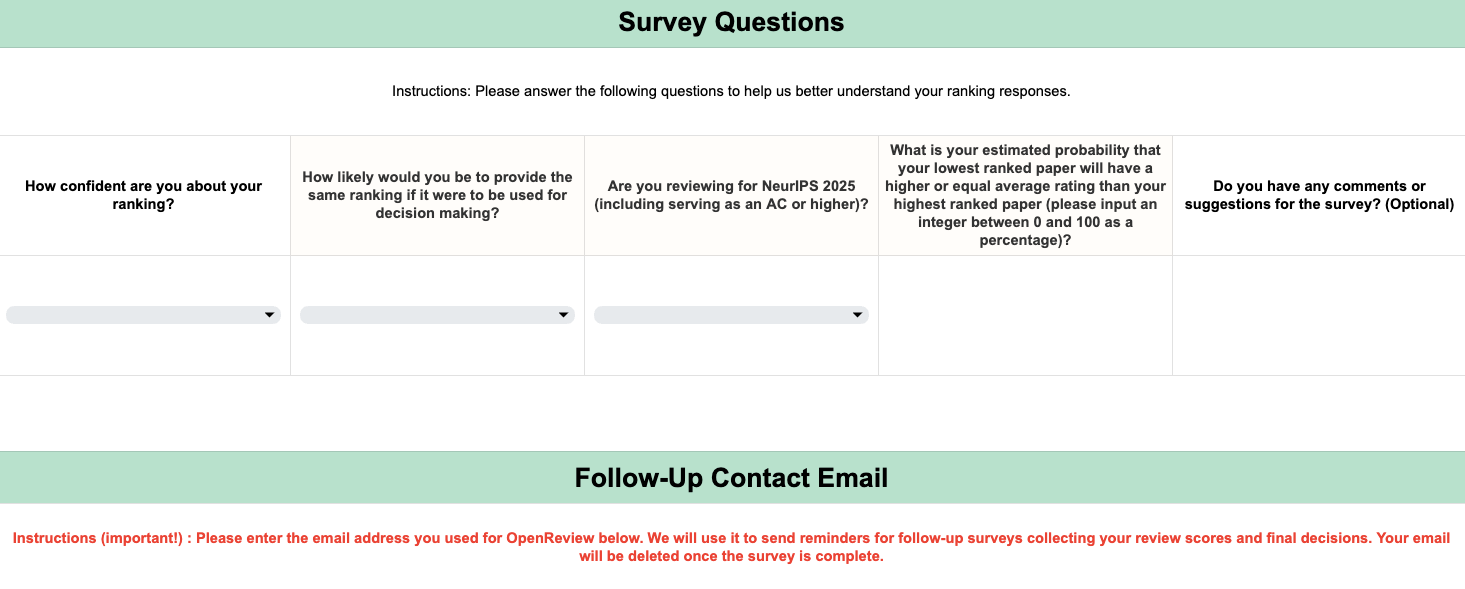

Who’s Adam? The Most Ridiculous NeurIPS Review Is Here

Have you received your NeurIPS 2025 review results yet? Brace yourselves—it’s officially open season for venting frustrations about reviewer comments!

Right on cue, we stumbled upon what might just be the funniest and most outrageous NeurIPS comment of the year. Credit goes to Yiping Lu, an assistant professor in Northwestern University’s Department of Industrial Engineering and Management Sciences and a proud Peking University alum.

Lu posted a screenshot of this legendary review on X (formerly Twitter), and it immediately went viral, with over 505,000 views in less than a day! Take a guess: was this review written by an LLM?

The Reviewer’s Baffling Comment

Line 336: “Both architectures are optimized with Adam.”

“Who/what is ‘Adam’? I think this is a very serious typo that the author should have removed from the submission.”

Yes, folks, you heard it correctly. The reviewer, presumably an expert, genuinely believed that “Adam”, one of the most fundamental optimization algorithms in machine learning, was either a typo or possibly a mysterious colleague named Adam.

Community Roast

Even Dan Roy, a respected professor and machine-learning researcher, couldn’t resist firing a shot:

“NeurIPS reviews are complete trash.”

🧨 The Deeper Issue: Quantity vs. Quality

With NeurIPS submissions skyrocketing toward 30,000 papers this year, it’s becoming glaringly obvious that human reviewers alone can’t handle the load. This mismatch between submission volumes and reviewer capacity inevitably leads to bizarre review outcomes like the infamous “Adam incident.”

Could AI Save Peer Review?

AI tools are already creeping into academic peer review. UC Berkeley postdoc Xuandong Zhao recently tweeted:

“#NeurIPS2025 reviews are out, and the authenticity of reviews surprises me again. Two years ago, maybe 1/10 felt AI-assisted. Now? It seems 9/10 are AI-modified, going beyond simple grammar fixes to fully generated comments.

As someone researching AI-generated content detection, these might be impressions rather than hard data. Still, this trend worries me: AI writing papers, AI reviewing them, AI publishing them. Is this really the future of academia we want?”

From drafting manuscripts to reviewing and publishing, AI now permeates the entire academic pipeline.

What Now? It’s Rebuttal Season!

As funny as this review is, it’s still critical to tackle it seriously in your rebuttals. Thankfully, one helpful community member shared an invaluable resource from 2020:

Final Note

Don’t forget to share your story by contributing to our cspaper.org!

-

NeurIPS Author-Reviewer Discussion: Key Points

Purpose:

- Only clarify reviewer questions. Do not add new arguments or extensive commentary.

How to Respond:

- Use the “Official Comment” button for each discussion thread.

- Be brief and to the point.

- Do not ask or urge reviewers to reply; ACs/PCs will manage that.

Confidential Comments:

- Use “Author AC Confidential Comment” for private notes to ACs (not visible to reviewers).

- Available until Aug 6.

Anonymity & Conduct:

- Do not reveal your identity or use links.

- Remain respectful at all times.

Timeline:

- Discussion closes Aug 6, 11:59pm AoE.

- Reviewer final ratings/justifications are hidden until notification.

- Save reviews before Aug 6 if you plan to withdraw.

Navigation:

- Use filters in OpenReview to view messages by author/reviewer/AC.

-

Author-Reviewer discussion is extended!

- Author-Reviewer discussion is extended by 48 hours, now closing on Aug 8, 11:59 PM AoE.

- AC-Reviewer discussions remain unchanged and will conclude by Aug 13.

- If a reviewer hasn’t replied to your rebuttal, feel free to initiate discussion and tag your AC for support.

- Even if a reviewer has marked “Mandatory Acknowledgement,” you’re encouraged to continue engaging if open questions remain.

- Late rebuttals (posted entirely after Jul 30) will be ignored to ensure fairness.

- You won’t see updated ratings until decisions are released. Reviewers can still express if your rebuttal was helpful.

Good luck and happy discussing!

-

Hey folks,

This is a quick but important reminder from the NeurIPS 2025 official email for everyone reviewing.

It lists some patterns that could lead to red flags and future consequences for reviewers. Let's break it down.

TL;DR – Don’t Click and Ghost

Clicking “Mandatory Acknowledgement” does NOT excuse you from discussion participation.

- Reviewers must actively participate in author discussions before clicking the acknowledgement button.

- Those who don’t engage will be flagged by Area Chairs (ACs) and the system.

- Such flags could lead to penalties under NeurIPS' Responsible Reviewing policy and may impact future invitations.

- Late rebuttals (posted in full after July 30) are to be ignored.

- Author-reviewer discussions have been extended to Aug 8, 11:59pm AoE. AC-reviewer discussions end Aug 13.

Red Flags to Avoid

These are specific behaviors being monitored and strongly discouraged:

- Clicking "Mandatory Acknowledgement" but not posting anything in the discussion thread

- Ignoring rebuttals or failing to respond to author comments

- Waiting until the very last moment to engage (or not engaging at all)

- Giving vague positive feedback in discussion but a harsh final justification

- Silent rating changes without explanation or communication

- Assuming low-scored papers don’t deserve replies (they do!)

What You Should Do Now

- Log in to OpenReview

- Read all rebuttals and comments

- Post a reply, even if your position hasn't changed:

  - If concerns are resolved: say so.

  - If not resolved: explain why.

- Ask clarifying questions if needed.

- Keep your final justification consistent with your public discussion.

- If you're unsure whether you need to respond, ask your AC.

When Is It OK to Submit “Mandatory Acknowledgement”?

Only when you have:

- Read the author rebuttal

- Engaged in the author-reviewer discussion

- Completed your final justification and updated your rating (if needed)

Responsible Reviewing Is Active

NeurIPS 2025 is running its Responsible Reviewing initiative:

- ACs can flag reviewers for lack of participation

- Negligent reviewing may impact your own submissions

- A record of poor reviewing will be maintained for future years

🧠 Final Thought

Let's treat authors with:

- Fairness

- Politeness

- Calmness

- Attention

- Focus on scientific merit

Whether you're supporting a paper or recommending rejection, a few sentences go a long way in building a healthy review culture.

-

This Year’s Community Observations

- Reviewer Silence: Most authors receive little to no real engagement, sometimes only a Mandatory Acknowledgement (MA).

- Score Disappearance: If a reviewer updates their rating/final justification, their score vanishes from the author console until decisions.

- Community Frustration: Many feel the “discussion period” is a one-way street; reviewers rarely debate or clarify further.

- Randomness: Even with identical scores, some papers get in, others don’t. “AC lottery” and “reviewer lottery” are real!

- Field Differences: Some subfields have lower average scores; a 4.0 may be top-20% in a tough area, borderline in others.

Exhaustive Table: Score Scenarios & Acceptance Hope

Below is a table constructed from real examples in the community (Zhihu, Reddit, etc.).

| Case | Initial Scores (C=Confidence) | Actions During Rebuttal | What Happened After | Community Analysis | Acceptance Hope |
| --- | --- | --- | --- | --- | --- |
| A | 5,5,4,3 | One 4 promised to increase; 3 is silent | Avg. visible 4.67 or 4.0 (if one vanishes) | 4+ avg with one “up” and no strong negative | Very good chance |
| B | 5,4,4,2 | 2 was highest confidence; author tried to convince; 4 promised up | Score vanishes after “up”, avg. jumps if visible | If both “up,” strong chance. If 2 stays, borderline; depends on AC | |
| C | 4,4,4,4 | All 4s; only MA or silence | Avg 4.0 | No reject, but no clear “champion.” Depends on AC and field bar | 50–75% (“bubble”) |
| D | 5,5,3,2 (C: 3,3,5,3) | Tried to “fix” the 2; 3 was picky | Only MA; avg 3.75/4.0 | Negative champion can kill it if not convinced; AC matters | Risky but possible if AC sympathetic |
| E | 3,3,4,5 | 4 promised “up”; 3 did MA | Avg jumps (3.75–4.0) | If “up” confirmed, likely to be accepted, esp. if 3 is no longer counted | Good odds if “up” happens |
| F | 3,4,4,4,5 | 4 said updated; 5 silent | Avg 4.2 | Most positive, one “update” is good | Likely accept if no major issues |
| G | 3,3,3,4 | Only one 4, rest 3s, some silence | Avg 3.25 | Borderline or below, need at least one “up” | Unlikely unless field has very low average |
| H | 4,4,3,3 | 4s MA, 3s silent | Avg 3.5–4.0 | Borderline, need at least one 3 to up | True “bubble”; AC can go either way |
| I | 5,4,3,3 (C: 2,1,4,4) | Both 3s high confidence; all silent | Avg 3.75 | Weak, but not hopeless. AC might tip if rebuttal strong. | 30–50% |
| J | 5,5,5,4,3 | 4 says will up, 3 silent | Avg >4 | Excellent if 4 moves up, still good if not | |
| K | 4,4,3,2 | All silent | Avg 3.25 | Very hard; needs miracle up-vote from 2 or 3 | Unlikely |
| L | 5,4,3,2; up to 5,5,3,3 | After rebuttal all up by 1 | Avg now 4 | If real, strong, but only if up-votes stick | |
| M | 3,5,5,2 | Tried hard to move 2, 5 says up | If avg now 4+, possible | Must move 2 or AC must ignore | 40% |
| N | 4,4,4,3 | All MA, no comments | Avg 3.75 | Bubble, depends on AC or field | |
| O | 2,3,5,5 | 3/2 not responding, 5/5 happy | Avg 3.75 | If no “up,” borderline; depends on AC and field | 30–60% |
| P | 3,4,5,5 | Some up, some down | Avg 4.25 | Good if up, depends if 3/4 “negative champion” | |
| Q | 2,2,2,2 | Submitted rebuttal anyway | Avg 2 | “No hope” zone | |
| R | 4,3,5,5 | 3 not convinced, 5/5 positive | Avg 4.25 | Good odds, but 3 can kill if AC is negative | |
| S | 3,3,4,4 (C: 4,4,3,3) | All said “up”; all promise up, scores vanish | Should be 4/4/4/4+ | Very strong, likely to be accepted | |
| T | 4,4,4,5 | MA only, no real engagement | Avg 4.25 | No negative champion, likely safe | |
| U | 5,4,4,2 | 2 up to 3, 4 to 5 | Scores vanish, avg >4 | Strong, very likely accept | |
| V | 5,4,4,3 | MA from 4s, 3 silent | Avg 4.0 | Borderline, need at least one “up” | |
| W | 5,4,3,2 | 2 still visible after MA | 2 unchanged | Low chance unless AC overrules | |
| X | 3,4,4,4 | 3 says “thanks, still weak” | Avg 3.75 | Need 3 to up, else risky | |
| Y | 3,3,3,4,4 | All 3s, one 4 | Avg 3.4 | Very difficult | |
| Z | 5,5,4,2 | 2 is negative champion | Avg 4 | On the line; needs AC intervention | |
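Several "What Happened After" entries depend on which scores are still visible. The sketch below shows the arithmetic for case A (5,5,4,3), assuming the console average is simply the mean of the scores still shown once an updated score disappears. That behavior is a community observation rather than documented OpenReview behavior, and `visible_mean` is a hypothetical helper:

```python
def visible_mean(scores, hidden_index):
    """Mean of the scores still shown when the score at hidden_index is hidden."""
    shown = [s for i, s in enumerate(scores) if i != hidden_index]
    return round(sum(shown) / len(shown), 2)


case_a = [5, 5, 4, 3]

# Mean with all four scores visible.
full_mean = round(sum(case_a) / len(case_a), 2)
print(full_mean)  # 4.25

# Mean of the remaining three scores, depending on which one is hidden.
print(visible_mean(case_a, 3))  # the 3 is hidden      -> 4.67
print(visible_mean(case_a, 0))  # one of the 5s hidden -> 4.0
print(visible_mean(case_a, 2))  # the 4 is hidden      -> 4.33
```

The 4.67 and 4.0 figures reported for case A are consistent with the 3 or one of the 5s being hidden, respectively. A jump in the visible average therefore does not by itself tell you in which direction a reviewer moved.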

What the Community Says

- Silence ≠ Rejection: Most reviewers do not reply, especially to borderline or mid-range scores. Don’t panic!

- MA Means Finalized: Once you see “Mandatory Acknowledgement,” you can’t see the score if they updated it, but they may have changed it up or down.

- Upvotes = Hope: Any reviewer explicitly saying “I am raising my score” is good; if the score vanishes, assume they did.

- Downvotes Happen: Occasionally, a reviewer will lower after rebuttal; this is rare but possible if the rebuttal is weak or reveals flaws.

- “Negative Champion” Danger: A single reviewer (usually the lowest) with high confidence can block a paper if not convinced. Always try to address their points specifically.

Area Chairs (AC) Role

- ACs often have leeway in borderline cases, especially if rebuttal is strong or all concerns are addressed except one.

- If all reviewers are “neutral” (3s and 4s), and no strong negative, ACs may push for acceptance, especially if field is tough.

- Community advice: if you have real engagement from at least one reviewer, and no strong rejections, odds are decent.

Finally

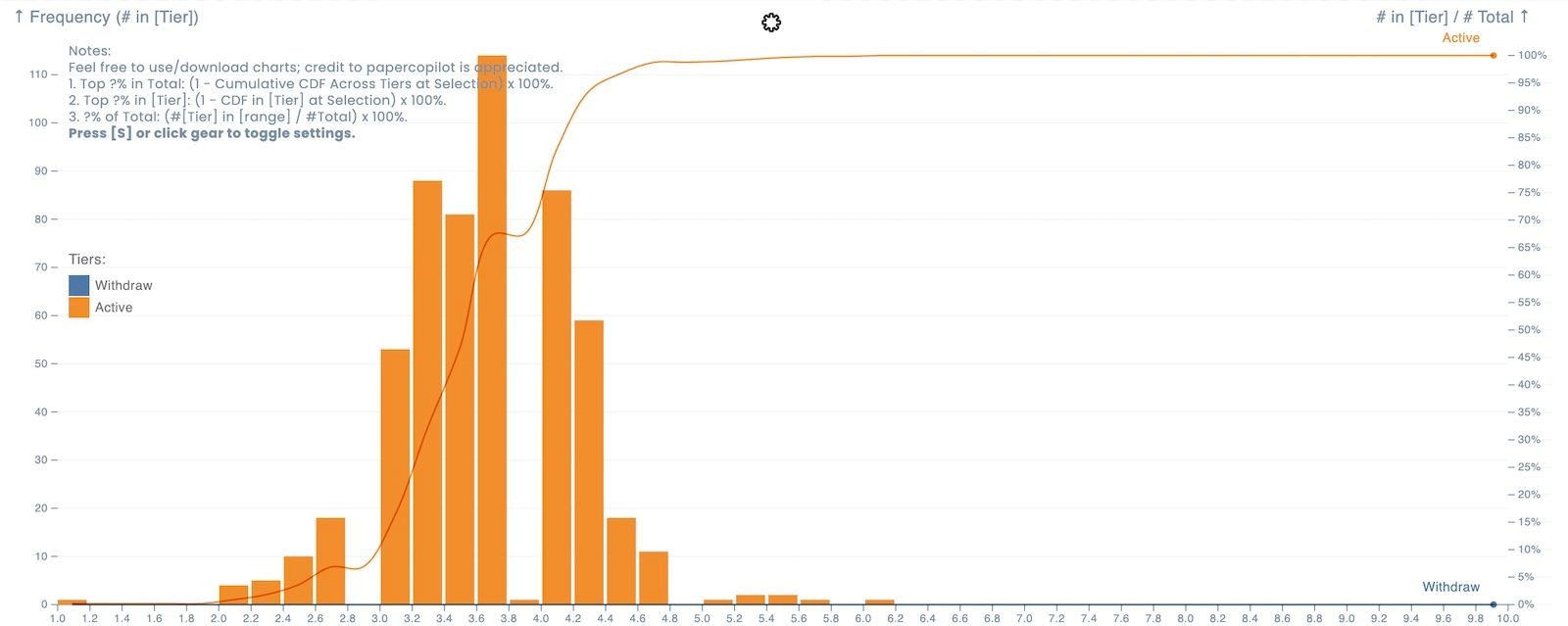

Here are the main takeaways from recent statistics (see screenshots):

- Acceptance Rate: NeurIPS acceptance rate has hovered around 25% for the last five years (see Paper Copilot NeurIPS 2025 statistics).

- Score Distribution: Most “active” (not withdrawn) papers have initial means in the 3.0–4.2 range.

- Community feedback is consistent with these tools: avg. 4+ is usually "safe", 3.5–4.0 is a coin toss, <3.5 is very unlikely.

- Confidences help: 4s and 5s from high-confidence reviewers are

-

D&B track Reviewer-AC Discussions Guide:

TL;DR: Discuss borderline papers with your AC and take all the feedback (including the Author-Reviewer discussions) into consideration.

Please note the following:

- When asked by the AC (please check the OpenReview forum for papers in your batch), please discuss any paper in question with your AC, if you have not done so yet.

- If you are one of the few reviewers who have not yet reacted to rebuttals, please do so immediately, and note that you might not have given authors sufficient opportunity to defend their position. The same holds true if you asked time-consuming questions a few hours before the end of Author-Reviewer discussions. Please be understanding in such cases.

- Please note you will be required to confirm (by pressing a “Mandatory Acknowledgement” button) that you read the reviews, participated in the discussions, provided final feedback in a dedicated “Final Justification” text box, and updated “Rating” accordingly before the end of reviewer-AC discussion (before Aug 13). Failure to do so will be flagged as irresponsible behavior.

- Kindly remember that for any irresponsible, insufficient or highly problematic reviews (including but not limited to possibly LLM-generated reviews) and problematic reviewers, the AC will be able to flag them during the Metareview stage. These scores will be used by PCs to justify desk rejection of submissions of the most negligent reviewers. Once again, we thank you for your understanding of our responsible reviewing initiative: https://blog.neurips.cc/2025/05/02/responsible-reviewing-initiative-for-neurips-2025/

Timeline (AoE) for the next few weeks:

- Author-Reviewer Discussions (July 31 - Aug 8): Passed.

- Reviewer-AC Discussions (Now - Aug 13): During this second phase, please discuss the paper, the reviews, and the author responses with the AC and Reviewers. Where reviewers have not done so yet, they must enter “Final Justification” and update “Rating” by pressing on your review “Edit->Review Revision” button (before Aug 13). “Rating” changes are not visible to authors until paper decisions are made visible.

- Metareview Discussions (Aug 14 - Aug 20): During this last phase, the area chairs will be writing their meta reviews and may elicit further comments and clarifications from reviewers and SAC.

- ACs make initial accept/reject recommendations with SACs: Aug 21 - Aug 30.

- Notification: September 18, 2025.

-

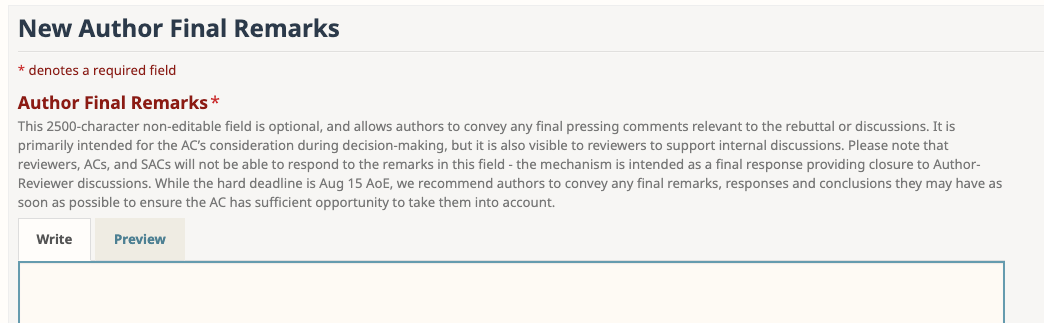

For main track, authors have a final chance to add their remarks

Dear Author(s),

To facilitate the authors’ response to any final questions and comments from the reviewers, we have decided to provide an “Author Final Remarks” response mechanism.

This 2500-character non-editable field is optional, and allows authors to convey any final pressing comments relevant to the rebuttal or discussions. It is primarily intended for the AC’s consideration during decision-making, but it is also visible to reviewers to support internal discussions. Please note that reviewers, ACs, and SACs will not be able to respond to the remarks in this field - the mechanism is intended as a final response providing closure to Author-Reviewer discussions. While the hard deadline is Aug 15 AoE, we recommend authors to convey any final remarks, responses and conclusions they may have as soon as possible to ensure the AC has sufficient opportunity to take them into account.

Sincerely,

Program Chairs: Nancy Chen, Marzyeh Ghassemi, Piotr Koniusz, Razvan Pascanu, Hsuan-Tien Lin;

Assistant Program Chairs: Elena Burceanu, Junhao Dong, Zhengyuan Liu, Po-Yi Lu, Isha Puri