When SSIM Hits 7.0: A Peer Review Caution from ECAI'24

In the world of computer vision, especially in style transfer and image generation research, evaluation metrics like SSIM, FID, and PSNR are household names. Most early-stage researchers know what they measure and where they fall short. Yet what happens when an SSIM score, which is mathematically bounded between -1 and 1, suddenly clocks in at 6.913?

Welcome to a strange episode that unfolded during ECAI 2024, where a paper titled "Progressive Artistic Aesthetic Enhancement For Chinese Ink Painting Style Transfer" made waves — not for its aesthetic beauty, but for the metrics behind it. This case offers a rare and valuable lesson on peer review rigor, the dangers of metric misuse, and the cultural dynamics surrounding academic integrity.

The SSIM incident

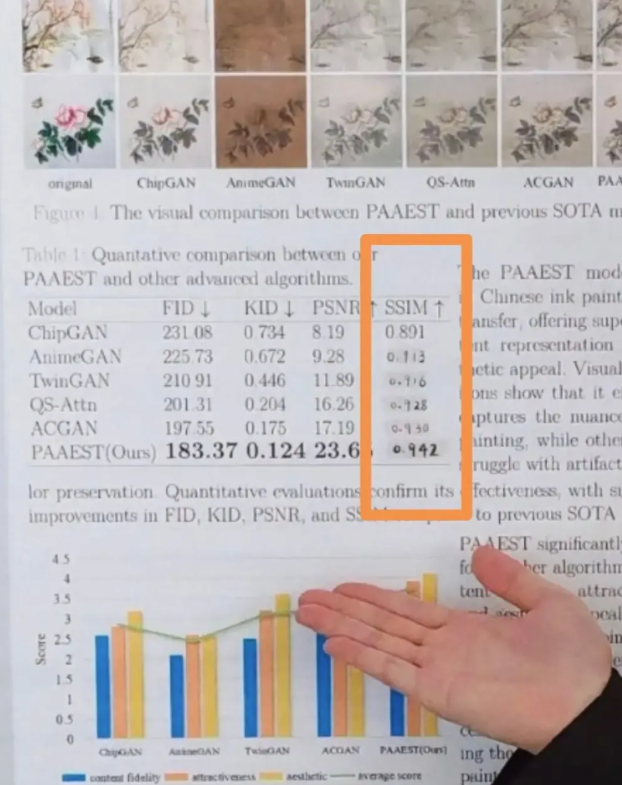

On the surface, the ECAI paper presents a style transfer method for Chinese ink painting and compares its results with methods such as ChipGAN, AnimeGAN, and TwinGAN. But one thing buried in Table 1 stood out: the SSIM reported for the proposed method, PAAEST, was 6.913, and other entries were well above 1 too.

For the uninitiated:

SSIM (Structural Similarity Index Measure) is a metric that ranges from -1 to 1, where 1 indicates perfect similarity between two images. Values beyond that are simply... mathematically impossible.
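To see why, here is the standard SSIM formula (Wang et al., 2004) for a pair of image windows x and y. It uses the means μ, the variances σ², the covariance σ_xy, and two stabilizing constants C_1 = (K_1 L)^2 and C_2 = (K_2 L)^2. Here L is the pixel dynamic range (255 for 8-bit images), and by default K_1 = 0.01 and K_2 = 0.03. The bound is sketched under the assumption that pixel values are non-negative, as they are for ordinary images.

$$
\mathrm{SSIM}(x, y) = \underbrace{\frac{2\mu_x\mu_y + C_1}{\mu_x^2 + \mu_y^2 + C_1}}_{l(x,y)} \cdot \underbrace{\frac{2\sigma_{xy} + C_2}{\sigma_x^2 + \sigma_y^2 + C_2}}_{cs(x,y)}
$$

$$
\begin{aligned}
(\mu_x - \mu_y)^2 \ge 0 \;&\Rightarrow\; 2\mu_x\mu_y \le \mu_x^2 + \mu_y^2 \;\Rightarrow\; 0 < l(x,y) \le 1 \quad (\mu_x, \mu_y \ge 0),\\
|\sigma_{xy}| \le \sigma_x\sigma_y \le \tfrac{1}{2}\left(\sigma_x^2 + \sigma_y^2\right) \;&\Rightarrow\; -1 \le cs(x,y) \le 1,\\
&\Rightarrow\; -1 \le \mathrm{SSIM}(x,y) \le 1.
\end{aligned}
$$

In practice, SSIM is computed over local windows (an 11×11 Gaussian window in the original paper), and the per-window values are averaged. An average of numbers in [-1, 1] stays in [-1, 1], so no choice of window or reference image can produce 6.913.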

This anomaly didn't go unnoticed. A sharp-eyed peer reviewer or conference attendee (the exact timeline is unclear) questioned it. What followed was an unusual and very public unraveling.

From inflated metrics to white-out corrections on the poster

Photos surfaced on XiaoHongShu showing the author standing next to his ECAI poster, but something was off. The original SSIM values had been visibly covered with correction fluid and rewritten as more "reasonable" values such as 0.9. This quick fix raised more red flags than it resolved.

Soon, further inconsistencies were discovered:

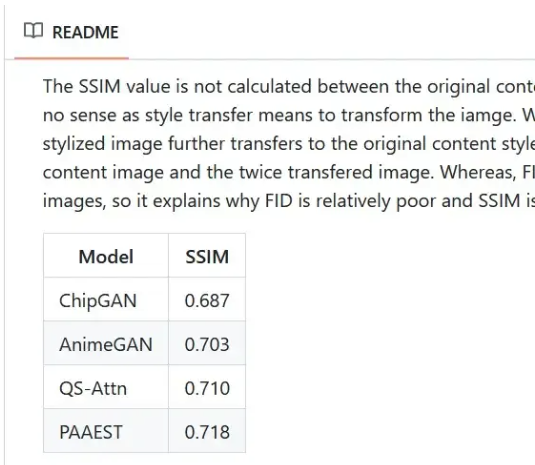

- Updated values later posted on GitHub changed again, this time to about 0.718.

- The explanation given was that SSIM had mistakenly been computed between the stylized images and the original style image rather than the content image, which violates standard evaluation conventions.

- The same author had also published 10+ first-author papers within a single year across unrelated fields: AI safety, economics, vehicle design, even poetry generation.
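The reference-image mix-up described above can be made concrete. In style transfer, SSIM is conventionally used to measure how much of the content image's structure survives stylization, so the reference should be the content image. The sketch below scores one stylized output against both candidate references. It makes several simplifying assumptions. It works on flat lists of grayscale values and treats the whole image as a single SSIM window. The helper names `ssim_single_window` and `evaluate_stylization` and the toy pixel values are hypothetical. Real evaluations should use a windowed implementation such as scikit-image's `skimage.metrics.structural_similarity`. Swapping the reference changes what the number means, but neither choice can push it outside [-1, 1].

```python
from statistics import fmean


def ssim_single_window(x, y, data_range=255.0):
    """SSIM between two equally sized sequences of pixel values.

    Simplification (assumption): the whole image is treated as one window,
    whereas standard SSIM averages this quantity over many local windows.
    """
    if len(x) != len(y) or len(x) == 0:
        raise ValueError("images must be non-empty and equally sized")
    c1 = (0.01 * data_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = fmean(x), fmean(y)
    var_x = fmean((a - mu_x) * (a - mu_x) for a in x)
    var_y = fmean((b - mu_y) * (b - mu_y) for b in y)
    cov_xy = fmean((a - mu_x) * (b - mu_y) for a, b in zip(x, y))
    luminance = (2 * mu_x * mu_y + c1) / (mu_x * mu_x + mu_y * mu_y + c1)
    structure = (2 * cov_xy + c2) / (var_x + var_y + c2)
    return luminance * structure  # each factor is bounded, so is the product


def evaluate_stylization(stylized, content, style):
    """Hypothetical helper: score one output against both references."""
    return {
        "vs_content": ssim_single_window(stylized, content),  # conventional
        "vs_style": ssim_single_window(stylized, style),  # the reported mistake
    }


if __name__ == "__main__":
    # Toy 4x4 grayscale images, flattened row by row (made-up values).
    content = [10, 40, 80, 120, 30, 60, 100, 140,
               50, 80, 120, 160, 70, 100, 140, 180]
    style = [200, 20, 200, 20, 20, 200, 20, 200,
             200, 20, 200, 20, 20, 200, 20, 200]
    # Crude stand-in for a stylized output: a blend of content and style.
    stylized = [0.7 * c + 0.3 * s for c, s in zip(content, style)]
    for name, value in evaluate_stylization(stylized, content, style).items():
        assert -1.0 <= value <= 1.0  # holds whichever reference is used
        print(f"{name}: {value:.3f}")
```

The two scores answer different questions. The first asks how well the content was preserved, and the second asks how closely the output resembles the style image. A paper has to state which one it reports.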

The broader peer review implications

This story exposes cracks in the peer review system that many early-career researchers must confront:

1. Review of evaluation metrics

Too many papers get by with shallow metric reporting, and reviewers may skim tables without asking whether the numbers are even possible. The SSIM = 6.913 case is a cautionary tale: if you're reviewing and something looks off, speak up.
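Catching this class of error does not require access to the authors' code. A range check on the reported table is enough. Below is a minimal sketch that assumes these theoretical ranges: SSIM in [-1, 1], PSNR non-negative for pixel values in [0, MAX], and FID non-negative as a squared distance. The function name `out_of_range` and the table layout are hypothetical. The example values are the two SSIM figures quoted in this post.

```python
import math

# Theoretical ranges for common image metrics (assumption: pixel values lie
# in [0, MAX], so PSNR cannot be negative); math.inf marks no upper bound.
METRIC_BOUNDS = {
    "SSIM": (-1.0, 1.0),
    "PSNR": (0.0, math.inf),  # dB; infinite only for identical images
    "FID": (0.0, math.inf),   # a squared distance between two Gaussians
}


def out_of_range(table):
    """Return (row, metric, value) for every reported value outside its
    metric's theoretical range. `table` maps row name -> {metric: value}.
    NaN is flagged too, because every comparison with NaN is False."""
    problems = []
    for row, metrics in table.items():
        for metric, value in metrics.items():
            bounds = METRIC_BOUNDS.get(metric)
            if bounds is None:
                continue  # unknown metric: nothing to check against
            low, high = bounds
            if not (low <= value <= high):
                problems.append((row, metric, value))
    return problems


if __name__ == "__main__":
    reported = {
        "PAAEST (Table 1)": {"SSIM": 6.913},
        "PAAEST (GitHub update)": {"SSIM": 0.718},
    }
    for row, metric, value in out_of_range(reported):
        print(f"{row}: {metric} = {value} is outside its possible range")
```

A check like this only catches impossible numbers. A plausible-looking 0.718 passes it, which is why the reference-image question still has to be asked.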

2. Quantity over quality?

An undergraduate publishing 10+ solo papers spanning NLP, computer vision, GANs, and economics within a single year is not simply impressive; it raises credibility concerns. Genuine interdisciplinary work is rare and usually collaborative, so solo authorship across so many fields invites scrutiny.

3. The dangers of non-open research

None of the author's papers released code, so claims of state-of-the-art (SOTA) performance went unverified. Without public code, independent replication is difficult at best, which runs against the spirit of scientific inquiry.

Lessons

This isn’t just gossip. It's a systemic warning.

For early-stage researchers:

- Know your metrics deeply. If you use SSIM, FID, or PSNR, understand the math behind them and use them properly.

- Be transparent. If you make a mistake, own it. Quietly applying white-out to a poster isn’t a correction; it’s a cover-up.

- Focus on depth over breadth. The temptation to churn out papers can lead to shortcuts that damage your long-term credibility.
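PSNR is a good example of what knowing a metric's math buys you. It is defined as 10·log10(MAX² / MSE). MAX is the largest possible pixel value, and MSE is the mean squared error between the two images. The minimal sketch below assumes flat lists of 8-bit pixel values (MAX = 255), and the function name is illustrative. Two properties follow directly from the formula. First, PSNR is measured in decibels and has no finite upper bound: identical images give infinity. Second, it cannot be negative when both images stay within [0, MAX], because the MSE can then be at most MAX².

```python
import math


def psnr(x, y, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized
    sequences of pixel values in [0, max_value]."""
    if len(x) != len(y) or len(x) == 0:
        raise ValueError("images must be non-empty and equally sized")
    mse = sum((a - b) ** 2 for a, b in zip(x, y)) / len(x)
    if mse == 0:
        return math.inf  # identical images: no noise, no finite PSNR
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    original = [52, 55, 61, 66, 70, 61, 64, 73]
    noisy = [p + 2 for p in original]  # every pixel off by 2 gray levels
    print(f"{psnr(original, noisy):.2f} dB")
    print(psnr(original, original))  # identical images
```

In this example, every pixel differs by 2, so the MSE is 4 and the PSNR is 10·log10(65025 / 4), about 42.11 dB. The worst case is an all-black image compared with an all-white one. There the MSE equals 255², and the PSNR is exactly 0 dB.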

For reviewers:

- Don't skim. Even something as small as a numeric inconsistency can signal much deeper issues.

- Advocate for open code and reproducibility, especially when SOTA is claimed.

- Peer review is not just gatekeeping. It’s a communal responsibility to uphold scientific integrity.

Conclusion

The SSIM 7.0 case might become a meme, but it points to a much more serious problem: the erosion of academic standards in the face of superficial productivity. Whether it's driven by publication pressure, competitive internship applications, or blind ambition, such cases will only multiply unless we build a stronger culture of accountability, mentorship, and technical literacy.

Let this be a wake-up call. May our future metrics stay within bounds, and our science stay honest.