From "PDF is Dead" to AI Vibe Reading: Is Research Writing Ready for the LLM Era?

-

A Storm Is Coming for Research Writing

If you’ve been anywhere near X (formerly Twitter) or the AI corners of the internet lately, you’ve seen the latest “brainwave” from Andrej Karpathy: PDF is dead. After reshaping programming with "Vibe Coding," Karpathy has turned his gaze to research communication. His claim is bold:

“In the future, 99% of research attention won’t come from humans — it’ll come from AI. And for AIs, PDF is obsolete.”

What does that mean for CS researchers, peer reviewers, and the whole paper ecosystem? Let’s dive into the discussion, which is rocking the research community right now.

The Problem: Humans Can’t Keep Up

A quick reality check:

- In 2010, 1.9 million scientific articles were indexed globally.

- By 2022, that number hit 3.3 million.

- In 2025, we expect about 3.7 million.

That’s over 10,000 new papers per day. Nobody, not even your caffeine-fueled advisor, can keep up.

Peer reviewers, editors, and even lead authors are overwhelmed. As one biologist and community influencer, Michael Levin, put it:

“Other scientists have the same issue and have no time to read most of my lengthy conceptual papers either. So whom are we writing these papers for?”

AI Vibe Reading: The Next Paradigm

Karpathy’s vision is simple, if radical:

- In the era of Large Language Models (LLMs), AI, not humans, will be the primary reader of scientific output.

- Instead of linear, prose-heavy, human-oriented PDFs, research will move to AI-optimized, structured formats (think of Markdown, Git-style modular content).

- “Vibe Reading” is the new "Vibe Coding": AIs learn and synthesize the scientific landscape at machine speed, not human speed.

What’s Wrong with PDF?

- PDFs are static, unstructured, and “written for people.”

- LLMs need machine-friendly formats to parse, synthesize, and reason over vast troves of knowledge.

- The traditional narrative arc (abstract, intro, methods...) is a bottleneck, especially when AIs can pull insights from structured data, code, and annotations directly.

Community Reactions: A Hive of Debate

This isn’t just Karpathy’s lone howl at the moon — thought leaders and scientists are chiming in:

-

Carlos E. Perez:

“The fundamental problem is that for humans, content is rendered as a linear narrative. But for AI, we could structure it for direct semantic parsing. Are we ready to write for AI first?” -

Kirk Patrick Miller:

“I now write for the AI. It’s the only reader who can keep up!” -

Caroline Rommer:

“PDFs are soooo XX century. A lot of work goes into puffing up introductions just for editors and referees. Dispense with all that crap!”

The consensus: We are drowning in papers. Only AI can swim fast enough.

What Would AI-Native Research Look Like?

Picture this future:

- Papers as modular, API-like objects: Each claim, experiment, or dataset is a block, easily referenced, remixed, or updated.

- Metadata everywhere: Concepts, methods, and results are annotated, hyperlinked, and ready for LLMs to extract and reason over.

- Living documents: No more “camera-ready” PDFs, because research should be continuously versioned, open to peer review and AI audit.

- Machine and human co-readership: Scientists focus on synthesis and big ideas, while AIs scan, summarize, and cross-link at scale.

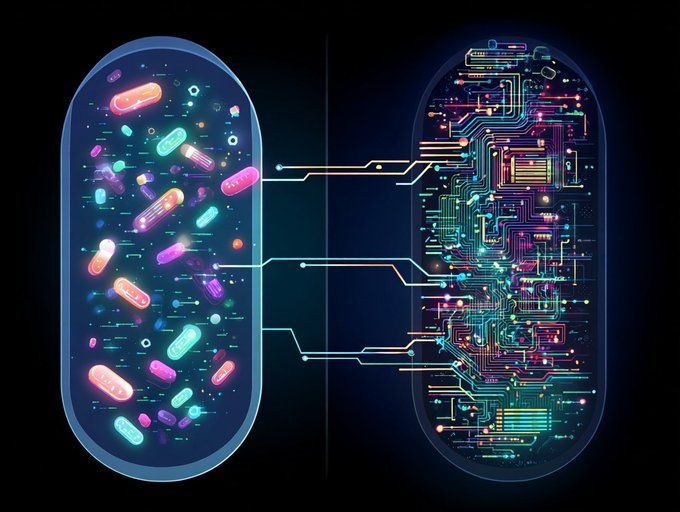

How to build a thriving open source community by writing code like bacteria do 🦠. Bacterial code (genomes) are:

- small (each line of code costs energy)

- modular (organized into groups of swappable operons)

- self-contained (easily "copy paste-able" via horizontal gene transfer)

If chunks of code are small, modular, self-contained and trivial to copy-and-paste, the community can thrive via horizontal gene transfer. For any function (gene) or class (operon) that you write: can you imagine someone going "yoink" without knowing the rest of your code or having to import anything new, to gain a benefit? Could your code be a trending GitHub gist?

This coding style guide has allowed bacteria to colonize every ecological nook from cold to hot to acidic or alkaline in the depths of the Earth and the vacuum of space, along with an insane diversity of carbon anabolism, energy metabolism, etc. It excels at rapid prototyping but... it can't build complex life. By comparison, the eukaryotic genome is a significantly larger, more complex, organized and coupled monorepo. Significantly less inventive but necessary for complex life - for building entire organs and coordinating their activity. With our advantage of intelligent design, it should possible to take advantage of both. Build a eukaryotic monorepo backbone if you have to, but maximize bacterial DNA.

Karpathy’s “bacterial code genome” analogy: modular, self-contained, and horizontally transferable research objects

Karpathy’s “bacterial code genome” analogy: modular, self-contained, and horizontally transferable research objectsImagining the PDF Replacement: Modular Research Genomes

So, what could actually replace the humble PDF? Drawing from Karpathy’s “bacterial code genome” analogy, the answer lies in modular, self-contained, and swappable “research objects”. Just as bacterial genomes are made up of small, modular operons that can be recombined and transferred, research outputs could become structured, composable “knowledge genes” for AIs to assemble and interpret.

A Concrete Vision (out of many ...)

- Project-Based Markdown Repositories:

Each paper becomes a repository: a directory of Markdown files, datasets, code, and metadata.- Every claim, experiment, and figure lives as a standalone, referenced module.

- All elements are machine-readable, versioned, and annotated for both humans and AIs.

- Standardized Metadata Blocks:

Just like gene annotations, each section (methods, results, claims) is tagged with rich semantic metadata, enabling granular search, summarization, and integration. - API-Accessible Knowledge:

Instead of uploading static PDFs, researchers “publish” APIs or endpoints that AIs can query, remix, and update, turning research into a living, evolvable network of knowledge blocks. - Horizontal Knowledge Transfer:

New “knowledge genes” (modules) can be copied or imported into other works, tracked for provenance and impact, mirroring horizontal gene transfer in bacterial evolution.

In this world, the next “paper” is less a linear story and more a dynamic, remixable collection of knowledge modules, which is built for AIs to learn, reason, and innovate with at massive scale.

Peer Review in an AI-First World

This is where it gets truly interesting for CS researchers and peer reviewers:

- Peer review will become AI-augmented: LLMs will pre-filter, summarize, and highlight anomalies or potential fraud.

- Quality control at scale: Automated tools will score clarity, reproducibility, and novelty—leaving humans to evaluate creativity and real-world impact.

- Dynamic, ongoing review: “Set it and forget it” preprints are replaced by evolving, continuously assessed research objects, tracked for influence and correctness.

Ready or Not, Change Is Here

The days of the PDF are numbered. As the volume of scientific output explodes and AI becomes the primary reader, our entire research communication and peer review ecosystem will need to evolve:

- Embrace structured, AI-optimized formats for your next paper.

- Think in modular, remixable objects, not linear stories.

- Prepare for a peer review process that is continuous, automated, and increasingly AI-driven.

The new mantra?

“Paper is Cheap, Show Me the Thoughts!”

How Will Peer Review Evolve?

- AI will become the first reviewer: Surfacing issues, inconsistencies, and connections across millions of works.

- Reviewers become curators and mentors: Guiding AIs and shaping the research landscape, focusing on the human elements — intuition, synthesis, ethics.

- New roles emerge: From “Prompt Engineers” for research summarization to “AI Peer Review Moderators.”

Call to Action

CS researchers:

Start thinking not just about how you write for humans, but how your work can be read, understood, and improved by AIs.

The next era of scientific communication (and peer review) belongs to those who adapt early.

What do you think — will you write your next paper for humans, or for AIs?