ICLR paper almost rejected for "citation omission", and then it became a spotlight?

A tale of research drama, rebuttal redemption, and the spotlight of glory

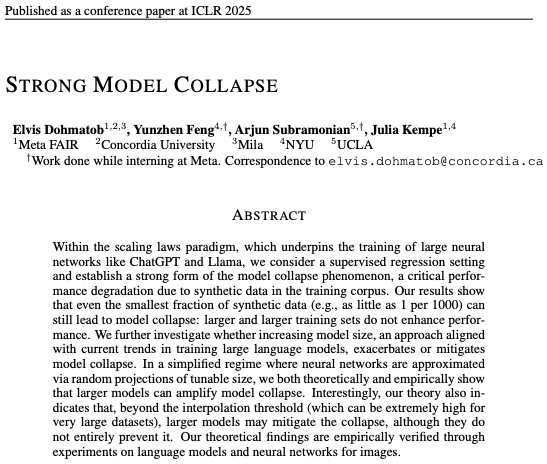

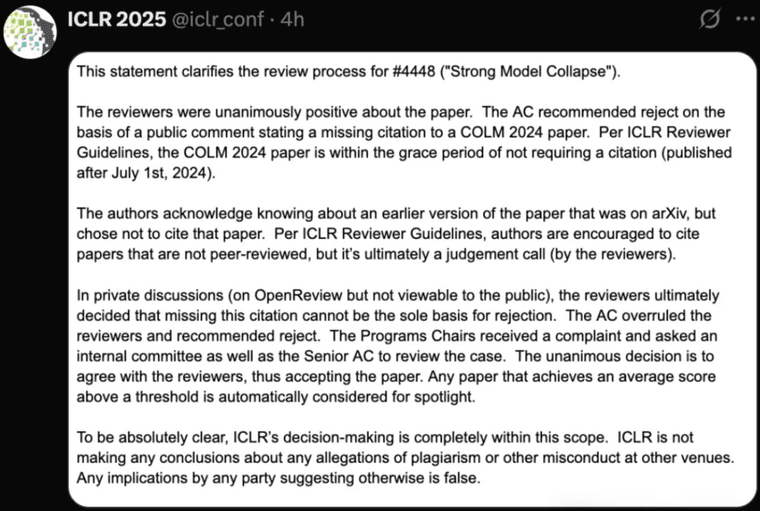

According to a report from 新智元 on April 13, 2025, an ICLR 2025 paper titled "Strong Model Collapse" was nearly rejected, despite unanimously positive reviews, simply because it didn’t cite a COLM 2024 paper. The area chair overruled the reviewers and recommended rejection.

Fortunately, the authors filed a successful rebuttal, and not only was the paper accepted, it became a spotlight paper. This high-stakes case also involved serious allegations of academic misconduct, raised tensions in the open-review ecosystem, and even caught the attention of Turing Award winner Yann LeCun.

Background: the controversy begins

The paper Strong Model Collapse, authored by researchers from Meta, NYU, and UCLA, made a bold claim:

Just 1% synthetic data can cause catastrophic failure in AI models.

The research gained early traction on arXiv and quickly stirred debate in the community. But when submitted to ICLR 2025, things took an unexpected turn:

- All reviewers gave positive feedback.

- However, the area chair recommended rejection, citing the authors’ failure to reference a paper published at COLM 2024.

This recommendation to reject, based solely on a citation omission, triggered protests from the authors. ICLR investigated and ultimately sided with the reviewers, reversing the area chair’s decision and accepting the paper as a spotlight.

The accusation: “deliberate suppression of prior work”

The missing citation was a COLM 2024 paper by Rylan Schaeffer, a PhD student at Stanford. Schaeffer publicly accused the ICLR authors of:

- Knowingly ignoring prior work that contradicted their conclusions.

- Borrowing methodological ideas without attribution.

- Using synthetic data while insulting prior research in the same domain.

He called it a case of “academic misconduct” and demanded that ICLR take action. On OpenReview, he rallied support for retracting the paper.

The rebuttal: “you copied our work using AI!”

Lead author Elvis Dohmatob didn’t stay silent. In a post on X (Twitter), he hit back, accusing Schaeffer of:

- Generating his COLM 2024 paper using LLMs with Strong Model Collapse as the prompt.

- Failing to acknowledge feedback provided in earlier discussions.

- Ignoring IRB requirements and acting unethically.

Julia Kempe (NYU, co-author of the ICLR paper) added fuel to the fire, revealing:

- Schaeffer and his collaborators had reached out for feedback but then used those responses without proper credit.

- One co-author of the COLM paper admitted the work was “rushed” and “low quality.”

- Even Schaeffer’s advisor had limited involvement in the paper.

Defining model collapse: where the theories diverge

The heart of the dispute? How to define model collapse.

- ICLR paper (Dohmatob et al.): defines model collapse as a significant drop in performance caused by synthetic data.

- COLM paper (Schaeffer et al.): defines it as recursive degradation over multiple training iterations.

Julia Kempe’s team argues that only their definition reflects real-world issues, where performance gaps between synthetic and real data matter the most.

They further argue that:

- The COLM paper doesn’t propose a practical solution.

- The mathematical contribution is trivial and misleading.

- The “new theorem” is just a slight variation of earlier work published by Kempe’s team.

Outcome and community reactions

ICLR 2025’s committee upheld the reviewers’ original positive decision. The paper was accepted and selected as a spotlight, which is reserved for the top ~5% of submissions.

The broader community responded with mixed feelings:

- Some praised ICLR for listening to authors and reviewers over area chair bias.

- Others criticized the drama as evidence of breakdowns in the open review system.

- Yann LeCun voiced his support for the ICLR authors, defending their definition and contribution.

Implications for other researchers

- Citation omissions, especially in competitive fields, can become flashpoints for controversy.

- Rebuttals matter: they can overturn rejections and even earn spotlight status.

- Definitions in scientific papers are not just semantics; they can shape the entire discourse.

- The rise of LLMs in research raises new questions about attribution, plagiarism, and paper generation.

Let us know what you think. Was the initial rejection fair? Should citation issues override technical merit? Share your thoughts below!

-

I believe this is not the only case; I have seen more like it.