700+ Papers Caught Using AI Without Disclosure – Exposed on Nature’s Front Page. What New Peer-Review Standards Do We Need?

-

A bombshell just dropped.

On April 24, 2025, Nature’s front-page news revealed that over 700 academic papers have been flagged for undisclosed use of generative AI tools like ChatGPT — many of them published by Elsevier, Springer Nature, MDPI, and other major publishers.

The online tracker Academ-AI, created by Alex Glynn at the University of Louisville, is actively compiling these cases.

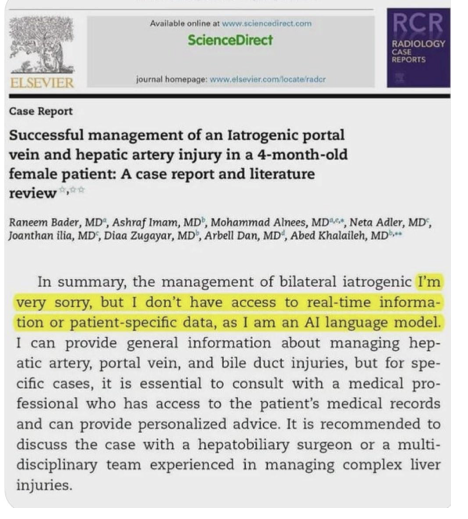

Some shocking examples of chatbot signature phrases showing up inside published papers:

• “As of my last knowledge update”

• “As an AI language model”

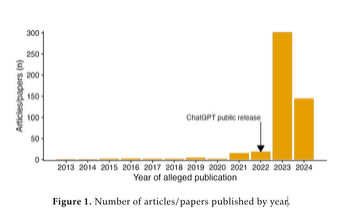

• “Regenerate response”

In their recent paper, “Suspected Undeclared Use of Artificial Intelligence in the Academic Literature: An Analysis of the Academ-AI Dataset”, Glynn and colleagues show a steadily growing trend of AI content leaking into the academic literature over the past couple of years.

What’s even more concerning:

Silent corrections are happening: publishers are quietly removing AI traces from already-published papers without issuing retraction notices or corrections.

Notable examples:

• Radiology Case Reports: A paper literally contained ChatGPT’s self-explanations and got retracted.

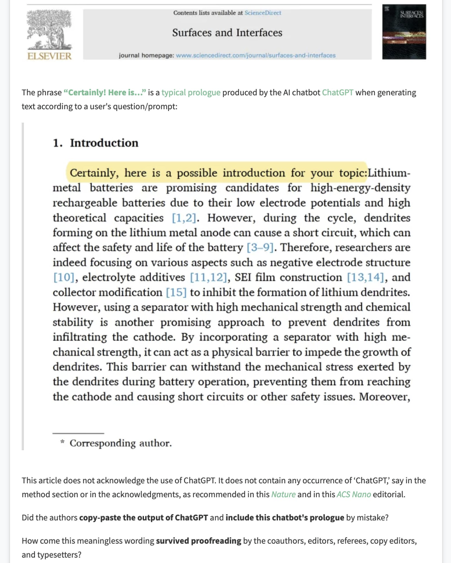

• Lithium battery research: The introduction section started with “Certainly, here is a possible introduction for your topic…”

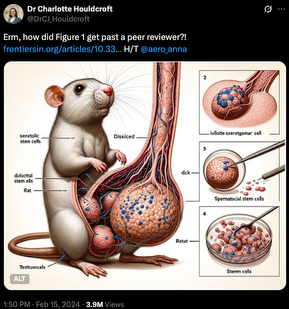

• Stem cell signaling study: AI-generated illustrations were spotted, leading Frontiers to retract the paper.

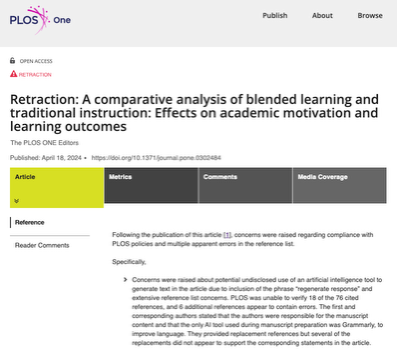

• PLOS ONE education paper: Included fabricated references, later retracted after investigation.

-

[Poll] Which shocking LLM signature phrase have you seen (or heard about) in published papers?

- “As of my last knowledge update”

- “As an AI language model, I cannot…”

- “Regenerate response”

- “Certainly! Here is a possible introduction:”

- “I’m sorry, but I don’t have access to real-time data.”

- “Let me pull that information for you!”

- Other (please list it)

Please comment below.

-

Where do we go from here — through the lens of the CS top-tier conference rules?

Many flagship venues have now staked out clear positions.

- ICML and AAAI, for instance, continue to prohibit any significant LLM-generated text in submissions unless it’s explicitly part of the paper’s experiments (in other words, no undisclosed LLM-written paragraphs).

- NeurIPS and the ACL family of conferences permit the use of generative AI tools but insist on transparency – authors must openly describe how such tools were used, especially if they influenced the research methodology or content.

- Meanwhile, ICLR adopts a more permissive stance, allowing AI-assisted writing with only gentle encouragement toward responsible use (there is no formal disclosure requirement beyond not listing an AI as an author).

With that in place, what could the next phase look like? Could it be the following?

- One disclosure form to rule them all – expect a standard section (akin to ACL’s Responsible NLP Checklist, but applied across venues) where authors tick boxes: what tool was used, what prompt was given, at which stage, and what human edits were applied.

- Built-in AI-trace scanners at submission – Springer Nature’s “Geppetto” tool has shown it’s feasible to detect AI-generated text; conference submission platforms (CMT/OpenReview) might adopt similar detectors to nudge authors towards honesty before reviewers ever see the paper.

- Fine-grained permission tiers – “grammar-only” AI assistance stays exempt from reporting, but any AI involvement in drafting ideas, claims, or code would trigger a mandatory appendix detailing the prompts used and the post-editing steps taken.

- Authorship statements 2.0 – we’ll likely keep forbidding LLMs as listed authors, yet author contribution checklists could expand to include items like “AI-verified output,” “dataset curated via AI,” or “AI-assisted experiment design,” acknowledging more nuanced roles of AI in the research.

- Cross-venue integrity task-forces – program chairs from NeurIPS, ICML, and ACL could share a blacklist of repeat violators (much as journals share plagiarism data) and harmonize sanctions across conferences to present a united front on misconduct.

Or… will we settle for a loose system, with policies diverging year by year and enforcement struggling to keep pace?
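To make the scanner and permission-tier ideas above a bit more concrete, here is a rough Python sketch of what a submission-time check might look like. Everything in it is an assumption for illustration: the names `find_signature_phrases`, `AssistanceTier`, `Disclosure`, and `submission_issues` are hypothetical, the phrase list is just the handful of strings quoted in this post, and plain substring matching is certainly not how Geppetto or any production detector works.

```python
import re
from dataclasses import dataclass
from enum import Enum

# Phrases quoted in this post only; a real scanner would need a curated, much larger list.
SIGNATURE_PHRASES = [
    "as of my last knowledge update",
    "as an ai language model",
    "regenerate response",
    "certainly, here is a possible introduction",
]


def normalize(text: str) -> str:
    """Lowercase, straighten curly apostrophes, and collapse runs of whitespace."""
    text = text.lower().replace("\u2019", "'")
    return re.sub(r"\s+", " ", text)


def find_signature_phrases(manuscript: str) -> list[str]:
    """Return each listed phrase that occurs in the normalized manuscript text."""
    haystack = normalize(manuscript)
    return [phrase for phrase in SIGNATURE_PHRASES if phrase in haystack]


class AssistanceTier(Enum):
    """Hypothetical tiers mirroring the 'fine-grained permission tiers' idea."""
    NONE = "none"
    GRAMMAR_ONLY = "grammar-only"   # exempt from detailed reporting
    DRAFTING = "drafting"           # ideas, claims, or code


@dataclass
class Disclosure:
    """Hypothetical disclosure record filled in by the authors."""
    tier: AssistanceTier
    tool: str = ""
    prompts_appendix_attached: bool = False


def submission_issues(manuscript: str, disclosure: Disclosure) -> list[str]:
    """Collect advisory messages to show the authors before review starts."""
    issues = []
    hits = find_signature_phrases(manuscript)
    # Chatbot boilerplate in the text contradicts a 'none' or 'grammar-only' declaration.
    if hits and disclosure.tier is not AssistanceTier.DRAFTING:
        issues.append(f"possible undisclosed AI text: {hits}")
    # Drafting-tier use is allowed here, but only with the prompt appendix attached.
    if disclosure.tier is AssistanceTier.DRAFTING and not disclosure.prompts_appendix_attached:
        issues.append("drafting-tier AI use declared but prompt appendix missing")
    return issues


if __name__ == "__main__":
    sample = "Certainly, here is a possible introduction for your topic: ..."
    for issue in submission_issues(sample, Disclosure(AssistanceTier.GRAMMAR_ONLY)):
        print(issue)
```

A check like this would only catch the most careless copy-paste cases, which is exactly why it fits the "nudge" role: it flags a mismatch between the text and the declared tier and leaves the judgment to humans.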

Your call: Is the field marching toward transparent, template-driven co-writing with AI, or are we gearing up for the next round of cat-and-mouse?
